I’m happy to report that the latest code we’ve added, which adds OpenStack support to Aeolus and will ship with our next release, is working successfully with HP Cloud, expanding our repertoire of public clouds.

While the support should allow us to support all OpenStack-based public cloud providers, in practice the APIs various providers expose are often mutated enough to prevent them from working. Rackspace, for example, has modified its authentication API enough to prevent authentication with Deltacloud today. I had similar issues trying Internap’s AgileCLOUD, which is using the hAPI interface that Voxel provided. (I understand that there’s a proper OpenStack environment in the works, though.)

But enough about what doesn’t work — HP’s cloud service does work! Getting it set up took a little figuring out, though, so I wanted to share some details.

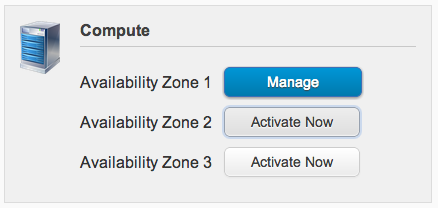

First things first, you’ll need to activate one or more of the Availability Zones for its Compute service:

Until at least one is activated, you’ll have a tough time authenticating and it won’t be apparent why. (Or, at least, this was my experience.)

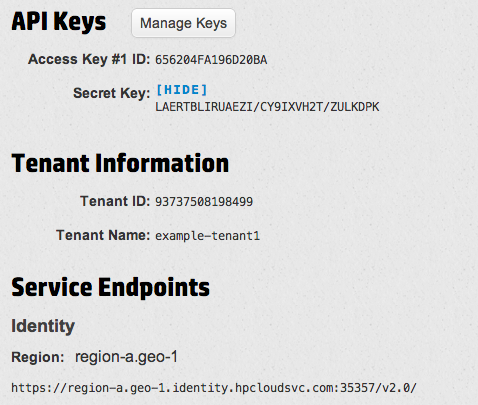

Once in, you’ll want to head over to the API Keys section to (you guessed it) get your API keys. Here’s an example of what it might look like (with randomized values):

(Just to be clear, the keys and tenant information were artificially-generated for this screenshot.)

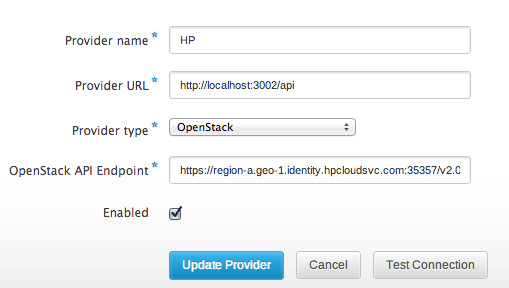

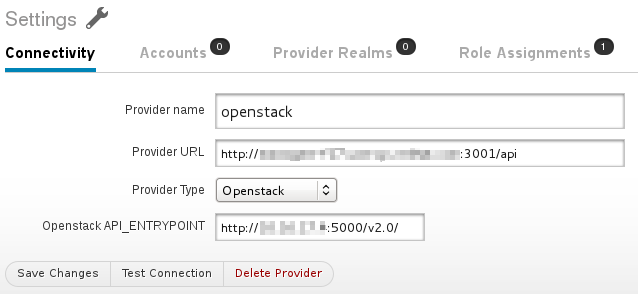

At the bottom is the Keystone entrypoint you’ll want to put in to set up the Provider:

This much is straightforward. Adding a Provider Account is a little more of an adventure.

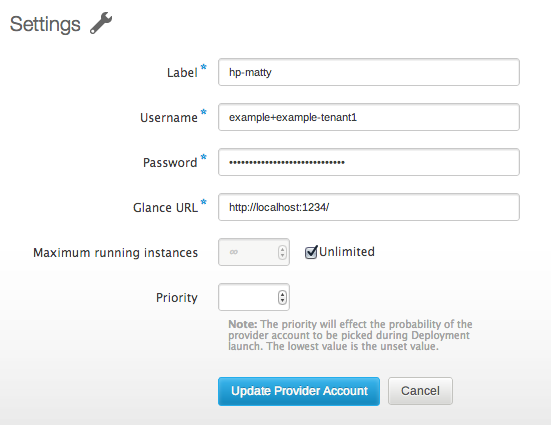

Despite what their documentation may say, the only way I’ve been able to authenticate through Deltacloud has been with my username and the tenant name shown — not the API keys, and not the Tenant ID.

In the example above, my Tenant Name is “example-tenant1”, with a username of “example”. So in Conductor, I’d want to enter “example+example-tenant1”, since we need to join username and tenant name that way. Password is what you use to log into the account.

Here you’ll notice that I cheat — Glance URL is currently a required field in Conductor. As best as I can tell, HP Cloud does not currently expose Glance to users, so there is not actually a valid Glance URL available. I’ve opened an issue to fix this in Conductor, but for right this second I just used localhost:1234 which passes validation.

As this may imply, we don’t presently support building images for HP Cloud, either, though there’s work being done to allow snapshot-style builds (in which a minimal OS is booted on the cloud, customized in place, and then snapshotted). What does work today are image imports, though.

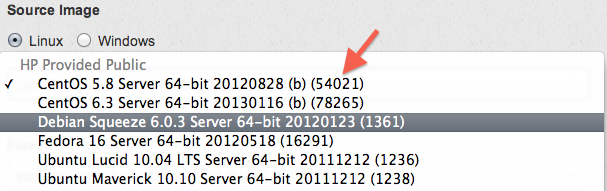

It took me a moment to figure out how to import a reference to an HP Cloud image, though. If you view the Servers tab within an Availability Zone and click “Create a new server from an Image”, you’ll get a dialog like this:

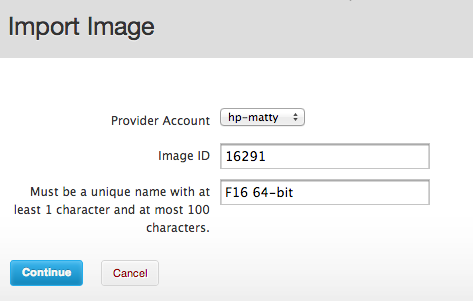

The orangey-red arrow point to the image ID — 54021 for the first one, 78265 for a CentOS 6.3 image, etc. These integers are what you enter into Conductor to import an image:

With an image imported, the launch process is just like with other providers, and you’ll be able to download a generated keypair and ssh in.

Of course, the job isn’t finished. The ability to build and push images is important for our cross-cloud workflow, and it’s something that’s in progress. And the Glance URL process is quite broken. But, despite these headaches, it works — I’ve got an instance running there launched through Conductor.