A few weeks ago, I spent two and a half weeks in Hong Kong, preparing for and then participating in the OpenStack Summit.

There, at the Red Hat booth, we did a live demo of using TripleO to deploy an 8-node overcloud. I arrived a week before the demo and worked with the fine folks at Tech-21 Systems to get everything installed. (They set up all of the hardware, provided by Quanta, and graciously let me use their office while I did the software setup. They even took me out for authentic dim sum.)

The demo

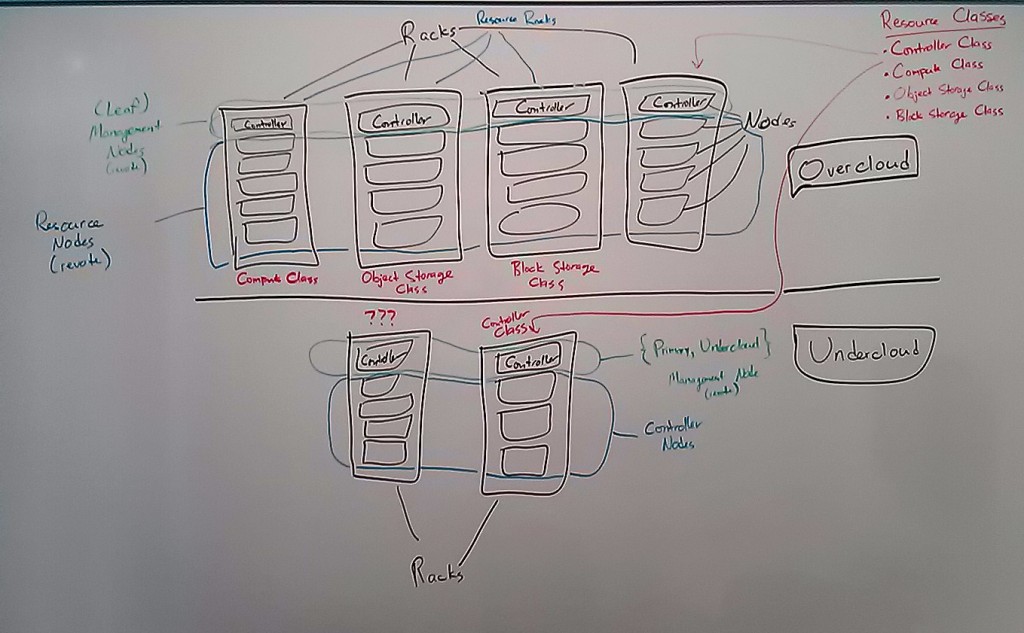

The rack of gear had 11 servers. I set one up as a utility machine (essentially a router / bastion host). The remaining ten were a control node, a leaf node, and eight resource nodes that would be provisioned as the overcloud. The images we used are available here. Basically, I booted the Undercloud-Control and Undercloud-Leaf images, did some quick configuration, imported the other images into Glance, registered the other systems, and provisioned the machines. (In fairness, there's still a lot of work to be done to make this go smoothly.)

Although I gave live demos, we also prepared a recorded video of the process in case the demo gods chose to smite us. Here's the video:

Performance and scalability

Our demo involved a single controller node and a single leaf node. The idea was to model a much larger deployment, with one central controller and one leaf node per rack. The leaf node takes commands from the controller and handles provisioning the machines in its rack. That makes it easy to keep each rack on an isolated L2 domain, and it also helps the design scale. In our setup, with just one leaf node and one controller, the leaf node wasn't strictly necessary.

Provisioning eight nodes took us 10-15 minutes. Early on, we were seeing deploy times closer to 30 minutes, until I realized that the NIC used for provisioning had come up at 100 Mbps instead of gigabit. Fixing that made a huge difference: the throughput for pushing out the image jumped from about 80 Mbps to about 800 Mbps. (I'm eager to see what happens with 10 Gigabit Ethernet!)

Ten to fifteen minutes to provision eight machines seems pretty fast overall, but there's still plenty of room to make it much faster. A surprising amount of the time was spent just waiting for machines to POST. (Getting details like the boot order right also matters in keeping this under control.) One problem I found is that the leaf node appears to deploy images serially, one machine at a time, rather than to all of the machines in parallel. We were more or less bottlenecked on network speed, so parallelizing that wouldn't necessarily have led to a massive improvement, but it's something that should be fixed.
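To make the serial-versus-parallel point concrete, here's a rough back-of-the-envelope model. It is a hypothetical sketch, not how the TripleO deploy code actually works: it assumes every node receives the same image over one shared provisioning link, that the link splits its bandwidth evenly among concurrent transfers, and that each node then pays a fixed, network-free overhead (reboot, POST). The 1 GB image size and 60-second overhead are made-up illustration values, not measurements from the demo.

```python
def transfer_seconds(image_gb: float, link_mbps: float) -> float:
    """Time to push one image over the link (decimal units: 1 GB = 8,000 megabits)."""
    return image_gb * 8000 / link_mbps


def serial_deploy_seconds(nodes: int, image_gb: float, link_mbps: float,
                          overhead_s: float) -> float:
    """One node at a time: each pays its full transfer plus its own fixed overhead."""
    return nodes * (transfer_seconds(image_gb, link_mbps) + overhead_s)


def parallel_deploy_seconds(nodes: int, image_gb: float, link_mbps: float,
                            overhead_s: float) -> float:
    """All transfers share the link evenly, so they all finish after
    nodes * (single transfer time); the fixed overheads then run concurrently."""
    return nodes * transfer_seconds(image_gb, link_mbps) + overhead_s


if __name__ == "__main__":
    # Hypothetical figures: a 1 GB image, 8 nodes, 0 s or 60 s of reboot/POST per node.
    for mbps in (80, 800):
        for overhead in (0, 60):
            s = serial_deploy_seconds(8, 1.0, mbps, overhead)
            p = parallel_deploy_seconds(8, 1.0, mbps, overhead)
            print(f"{mbps:>4} Mbps, overhead {overhead:>2} s: "
                  f"serial {s:6.0f} s, parallel {p:6.0f} s")
```

With zero overhead, the two functions return the same time: when the shared link is the bottleneck, running transfers in parallel just splits the same bandwidth eight ways. Under these assumptions, parallelism only pays off by overlapping the non-network time, such as waiting for machines to POST.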

OpenStack Summit in general

Roughly 3,500 people attended. I knew OpenStack was a substantial project, but seeing the crowd, and knowing that many of them had travelled literally thousands of miles to be there, really drove home just how enormous the community is and how quickly it is growing. And there was certainly no lack of enthusiasm.

I spent most of the Summit at the booth instead of in sessions. (We'll have to work on this next time!) Most of the sessions were recorded, though, and can be watched here. Even without attending the sessions, it was a great opportunity to meet many of the people I'd only worked with online until then.

Here's a short video Red Hat put together of the Summit, in which I have a silent three-second cameo (my big break into stardom, I think):

Fellow Red Hatter Steve Gordon captured a lot more of the Summit here.